Introducing AI Usage Measurement [Webinar Recording and Summary]

Watch as we unveil a new feature designed to measure the use of AI in your production workflows when you generate text, images and video. See how it works, why it matters, and how you can use Early Insights to compare options at budget stage. We also talk a little about the science behind the measurement and put AI in context, showing you how CO₂e impacts might vary between in-camera and fully AI approaches. The session finishes with a Q&A.

0:00 Welcome

2:28 About the feature including our collaboration with Hiili

5:13 Using Early Insights and Final Footprints

8:15 Demo of the feature in the carbon calculator

14:58 How much CO2e does using AI produce? Putting AI use in context

20:00 Case Study: Terre di Santivo (a fully AI piece of content)

29:15 Footprints for AI and in-camera variations

34:27 Best practice

36:25 Q&A

56:13 What to do next + close

Here's a little summary of what was covered:

AI Usage was introduced as an activity users can now measure

The webinar introduced AI Usage Measurement as a new, eleventh activity area within the AdGreen Carbon Calculator. This addition enables users to quantify the carbon footprint of AI-generated content, spanning text, image, and video outputs, by calculating the CO2e emissions associated with the electricity consumed for each response. The measurement is powered by Hiili’s scientifically validated methodology, which estimates energy use based on the type of AI model and content generated, then converts this into CO2e using location-specific carbon factors. This approach brings much-needed transparency to the environmental impact of creative AI tools, supporting more informed decision-making for sustainable production.
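The conversion step described above (estimated electricity multiplied by a location-specific carbon factor) can be sketched as follows. This is a minimal illustration only, not Hiili's or AdGreen's implementation: the function name and both input values are hypothetical placeholders, not figures from the methodology.

```python
def co2e_grams(energy_kwh: float, grid_factor_g_per_kwh: float) -> float:
    """Convert estimated electricity use into grams of CO2e.

    energy_kwh: electricity estimated for one AI response (kWh).
    grid_factor_g_per_kwh: carbon factor for the location (g CO2e per kWh).
    """
    return energy_kwh * grid_factor_g_per_kwh


# Hypothetical inputs, chosen only to show the arithmetic.
example_energy_kwh = 0.002     # assumed energy for one response
example_grid_factor = 200.0    # assumed location-specific factor

example_result = co2e_grams(example_energy_kwh, example_grid_factor)
print(f"{example_result:.2f} g CO2e for this hypothetical response")
```

The same energy estimate gives a different CO2e figure in a different location, which is why the carbon factor is location-specific.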

The methodology and its boundaries were presented

Hiili’s methodology, developed in partnership with Universidad Carlos III de Madrid and led by academic researchers, is grounded in peer-reviewed research and covers the most widely used generative AI models. The system uses a predictive simulator to estimate the electricity (kWh) required for each AI output, but it’s important to note the boundaries: the measurement does not include the CO2e from cloud storage of generated responses, water used in data centre cooling, or the energy consumed during the initial training of AI models. Read about why in our FAQ here.

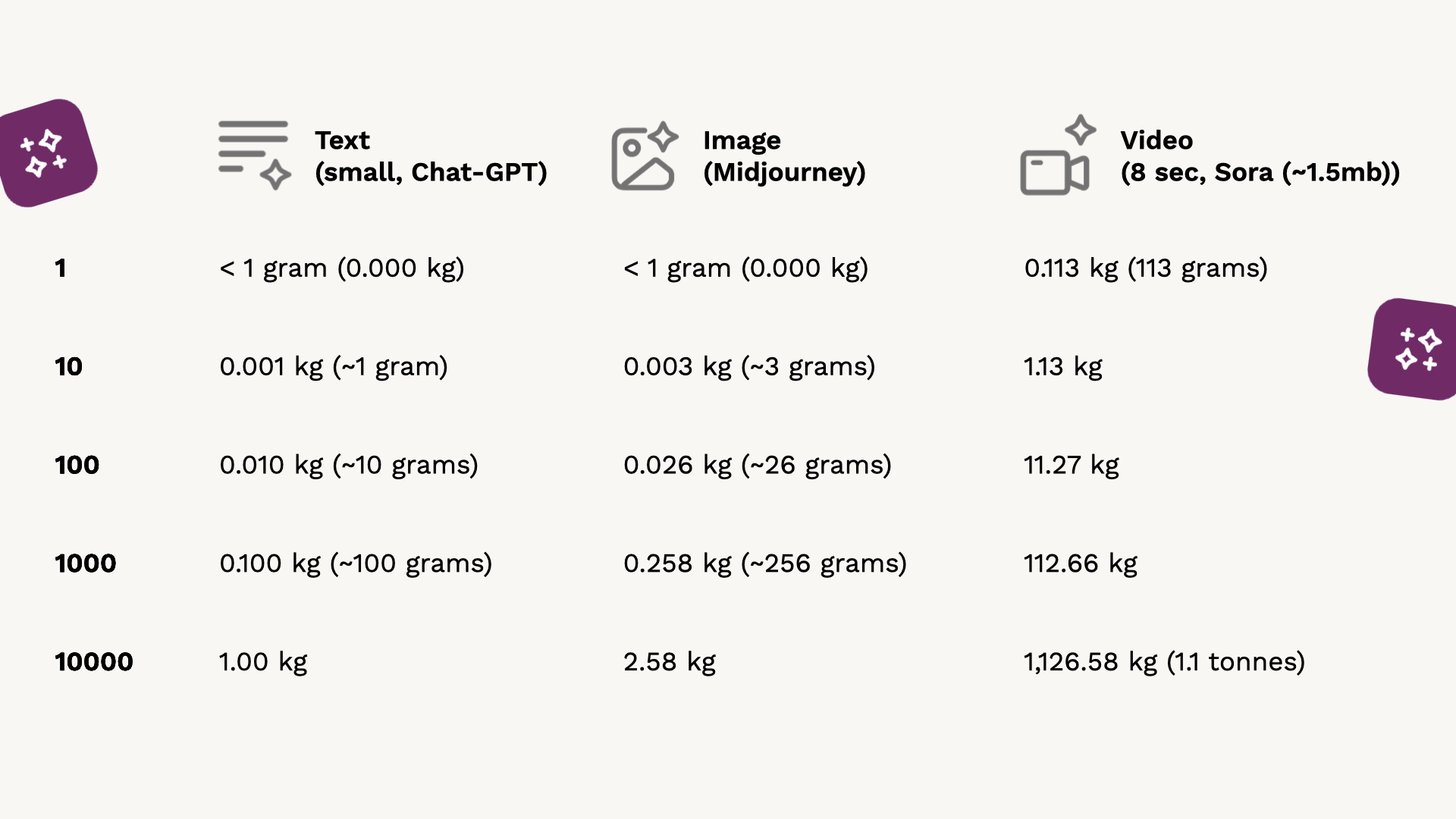

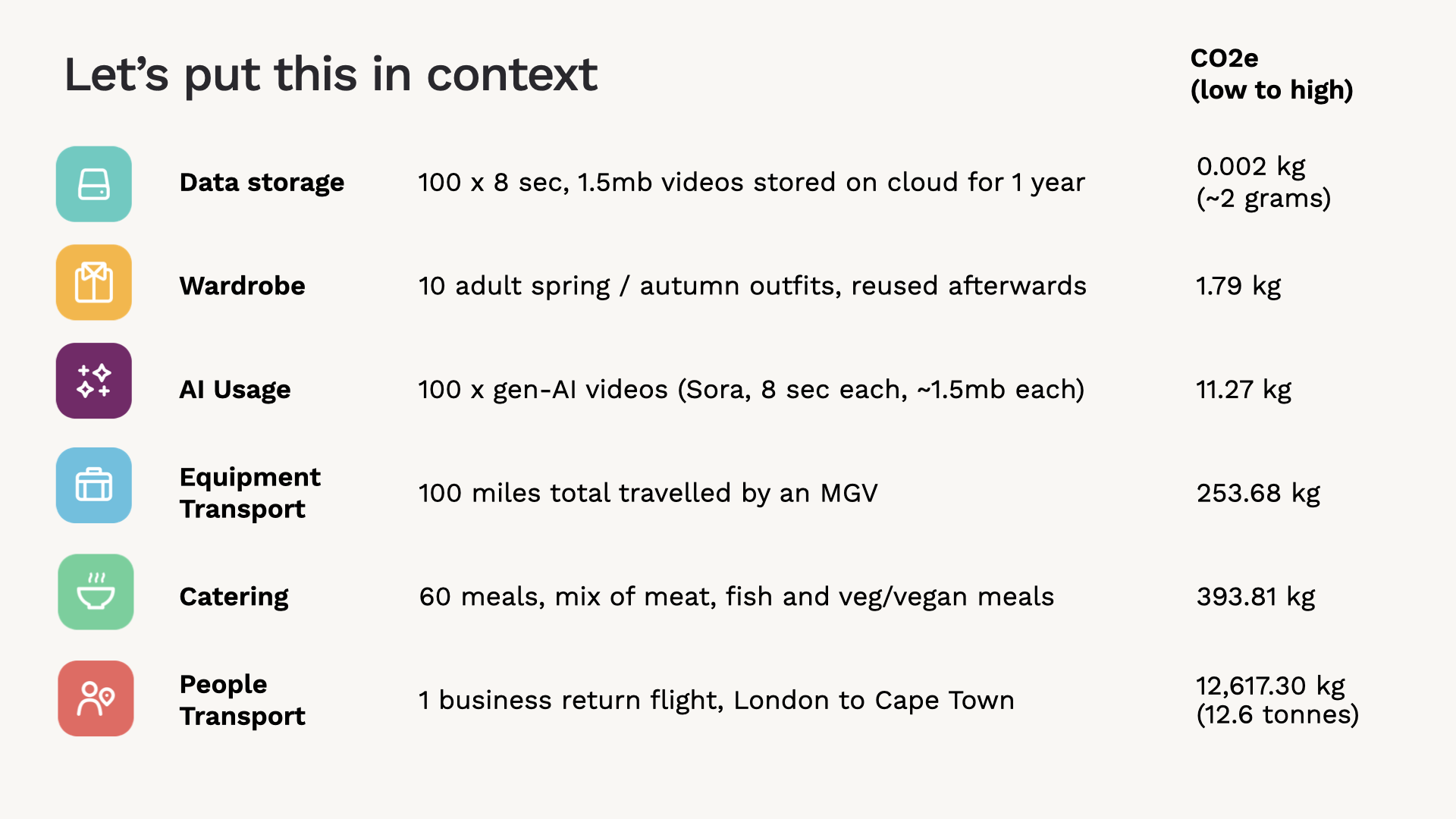

The CO2e from AI Usage was put into context

The session provided practical context for the carbon impact of AI content generation. For example, generating 1,000 short text responses with a model like ChatGPT produces around 100 grams of CO2e, while 1,000 image generations with Midjourney equate to roughly 258 grams. Video generation is significantly more intensive: 1,000 short (8-second) videos using Sora can result in just over 112 kg of CO2e.
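To make the per-1,000 figures easier to compare, the sketch below divides each one down to a single generation. It uses only the numbers quoted above; the dictionary keys and function names are illustrative, and the video figure is taken as exactly 112 kg even though the session said "just over".

```python
# Figures quoted in the session, in grams of CO2e per 1,000 generations.
QUOTED_GRAMS_PER_1000 = {
    "text": 100.0,       # short text responses ("around 100 grams")
    "image": 258.0,      # Midjourney images ("roughly 258 grams")
    "video": 112_000.0,  # 8-second Sora videos ("just over 112 kg")
}


def grams_per_item(kind: str) -> float:
    """Approximate grams of CO2e for a single generation of this kind."""
    return QUOTED_GRAMS_PER_1000[kind] / 1000


def estimate_grams(kind: str, count: int) -> float:
    """Scale the per-item figure to a given number of generations."""
    return grams_per_item(kind) * count


for kind in QUOTED_GRAMS_PER_1000:
    print(f"{kind}: ~{grams_per_item(kind):g} g CO2e per generation")
```

On these rounded figures, one 8-second video is on the order of a thousand times the footprint of one short text response. Real results depend on the model, the output and the location, so treat this as a scale comparison rather than a calculator.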

These figures were compared with other production activities, such as business flights, catering, and equipment transport, to help those watching understand the relative scale of AI’s footprint within a typical project.

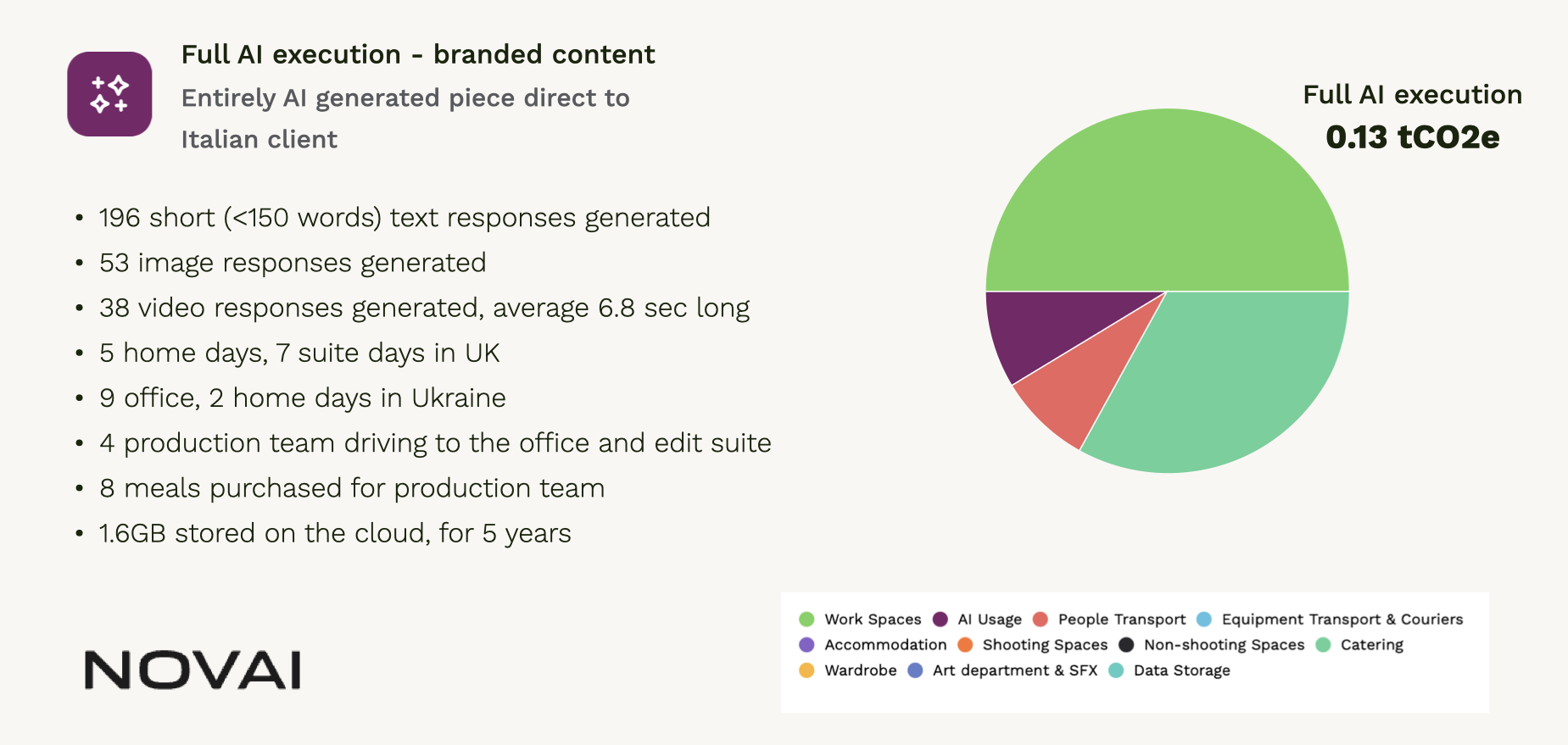

Case Study: AI footprint compared to that of a traditional production

A standout case study contrasted a recently produced, fully AI-executed branded content project, created by Novai, with what a traditional in-camera execution might look like. The AI-driven project, which involved generating nearly 200 text responses, 53 images, and 38 short videos, resulted in a total footprint of just 0.13 tCO2e. In contrast, a comparable in-camera production, requiring international travel, on-location crew, and physical resources, produced 2.18 tCO2e. This highlights the potential for AI to reduce emissions in certain contexts, while also underscoring the importance of considering the full production workflow when making sustainability decisions.
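The gap between the two case-study footprints can be expressed as a ratio and a percentage reduction. The sketch below uses only the two totals quoted above; the variable and function names are illustrative.

```python
AI_FOOTPRINT_T = 0.13         # tCO2e, fully AI execution (from the case study)
IN_CAMERA_FOOTPRINT_T = 2.18  # tCO2e, comparable in-camera execution


def compare(ai_t: float, in_camera_t: float) -> tuple[float, float]:
    """Return (how many times larger in-camera is, percentage reduction)."""
    ratio = in_camera_t / ai_t
    reduction_pct = (in_camera_t - ai_t) / in_camera_t * 100
    return ratio, reduction_pct


ratio, reduction_pct = compare(AI_FOOTPRINT_T, IN_CAMERA_FOOTPRINT_T)
print(f"In-camera is about {ratio:.1f}x the AI footprint")
print(f"AI route is about {reduction_pct:.0f}% lower")
```

For this one project, the in-camera estimate is roughly 17 times the AI footprint, a reduction of about 94%. The comparison is specific to this case study and should not be read as a general rule.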

Some useful AI measurement links:

- Find details of the new feature on our news page

- Benchmarks and assumptions used

- FAQ relating to our methodology (including about the things the new feature doesn't measure and why)

- Hiili's methodology paper is here: https://arxiv.org/pdf/2511.05597

- Watch Novai's 40" Terre di Santivo here